Research

My research interests are primarily in robotic grasping and manipulation. I am interested in designing and integrating robots and tactile sensors that leverage the sense of touch to perform tasks more effectively. I believe robots will be useful in our homes, assisting us with everyday tasks.

See a complete list of my publications

IndustReal: Transferring Contact-Rich Assembly Tasks from Simulation to Reality

Robotic assembly is a longstanding challenge, requiring contact-rich interaction and high precision and accuracy. Many applications also require adaptivity to diverse parts, poses, and environments, as well as low cycle times. In other areas of robotics, simulation is a powerful tool to develop algorithms, generate datasets, and train agents. However, simulation has had a more limited impact on assembly. We present IndustReal, a set of algorithms, systems, and tools that solve assembly tasks in simulation with reinforcement learning (RL) and successfully achieve policy transfer to the real world. Specifically, we propose 1) simulation-aware policy updates, 2) signed-distance-field rewards, and 3) sampling-based curricula for robotic RL agents. We use these algorithms to enable robots to solve contact-rich pick, place, and insertion tasks in simulation. We then propose 4) a policy-level action integrator to minimize error at policy deployment time. We build and demonstrate a real-world robotic assembly system that uses the trained policies and action integrator to achieve repeatable performance in the real world. Finally, we present hardware and software tools that allow other researchers to fully reproduce our system and results.
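
The idea behind a policy-level action integrator can be illustrated with a toy example. The sketch below is not the IndustReal implementation. It assumes a hypothetical 1-D plant whose low-level controller closes only part of the gap to its target each step and is subject to a constant disturbance, along with a hypothetical proportional policy. All names and constants are illustrative. It compares applying each action to the measured state with accumulating actions onto the previous target.

```python
def simulate(use_integrator, goal=1.0, steps=200,
             policy_gain=0.5, controller_gain=0.5, disturbance=0.01):
    """Return the final position error of a toy 1-D positioning task."""
    x = 0.0        # measured position
    target = x     # target sent to the low-level controller
    for _ in range(steps):
        action = policy_gain * (goal - x)   # policy outputs an incremental move
        if use_integrator:
            # Integrate actions onto the previous target, so residual error
            # keeps moving the target until the plant actually reaches the goal.
            target = target + action
        else:
            # Nominal: apply the action to the currently measured state.
            target = x + action
        # Imperfect low-level controller: partial tracking plus a constant
        # disturbance (standing in for friction or gravity).
        x = x + controller_gain * (target - x) - disturbance
    return abs(goal - x)
```

In this toy setup the nominal variant settles with a steady-state error of about 0.04 (the disturbance divided by the product of the two gains), while the integrated variant drives the error toward zero.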

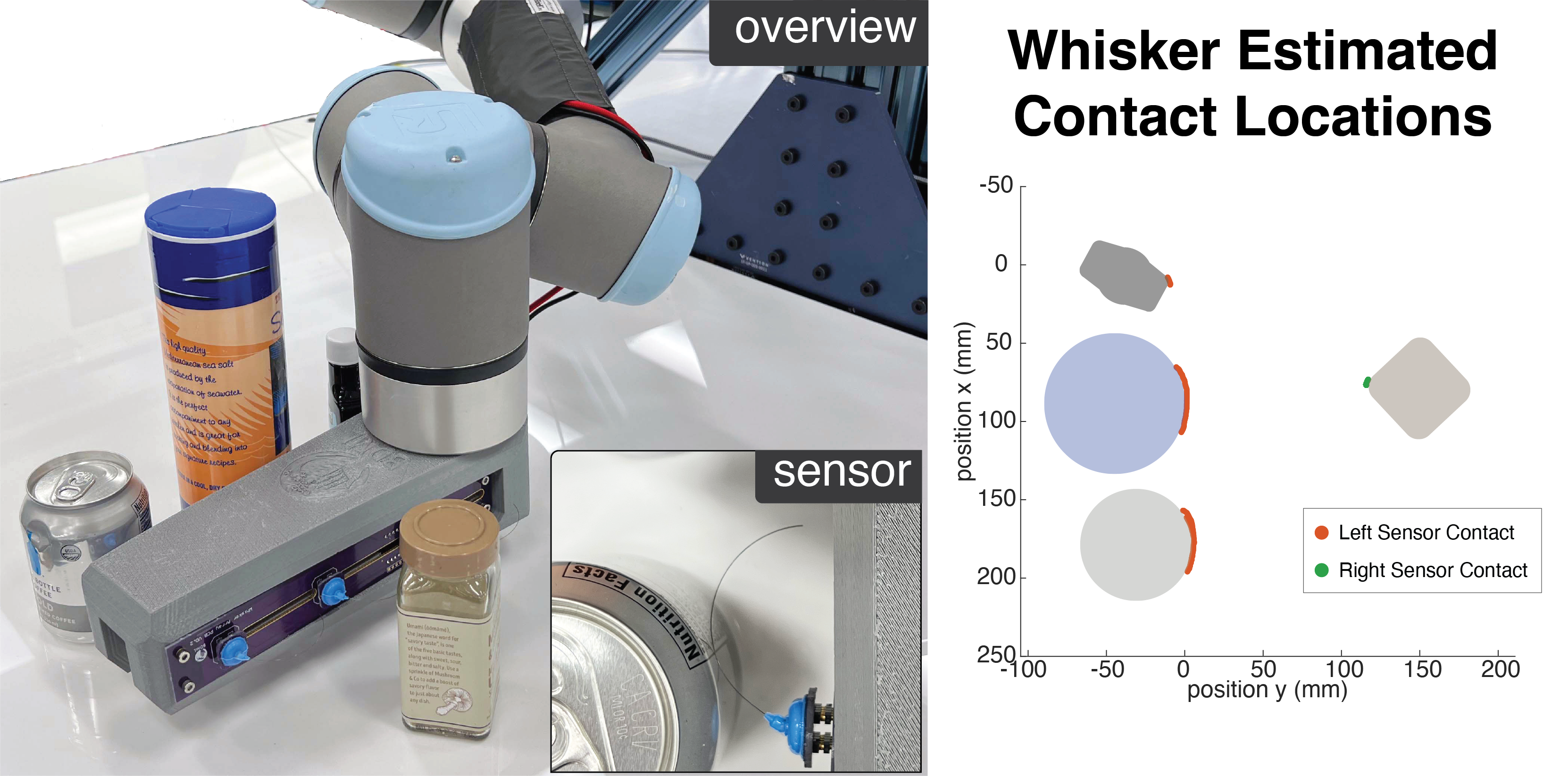

Whisker-Inspired Tactile Sensing for Contact Localization on Robot Manipulators

This work presents the design and modelling of whisker-inspired sensors that attach to the surface of a robot manipulator to sense its surroundings through light contacts. We obtain a sensor model using a calibration process that applies to straight and curved whiskers. We then propose a sensing algorithm using Bayesian filtering to localize contact points. The algorithm combines the accurate proprioceptive sensing of the robot with sensor readings from the deflections of the whiskers. Our results show that our algorithm is able to track contact points with sub-millimeter accuracy, outperforming a baseline method. Finally, we demonstrate our sensor and perception method in a real-world system where a robot moves in between free-standing objects and uses the whisker sensors to track contacts as they trace object contours.
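
As a rough illustration of Bayesian filtering for contact localization, the sketch below runs a scalar Kalman filter, one of the simplest Bayesian filters, over a single contact coordinate. It is not the sensor model or algorithm from the paper. The random-walk motion model, the direct noisy measurement of contact position, and all names and noise values are assumptions made for illustration.

```python
def kalman_step(mean, var, measurement, process_var, meas_var):
    """One predict/update cycle for a scalar contact coordinate."""
    # Predict: random-walk model, so the mean stays and uncertainty grows.
    var_pred = var + process_var
    # Update: blend prediction and measurement by their relative certainty.
    gain = var_pred / (var_pred + meas_var)
    new_mean = mean + gain * (measurement - mean)
    new_var = (1.0 - gain) * var_pred
    return new_mean, new_var


def track_contact(measurements, mean=0.0, var=1.0,
                  process_var=1e-4, meas_var=0.25):
    """Filter a sequence of noisy contact readings (hypothetical units: mm)."""
    for z in measurements:
        mean, var = kalman_step(mean, var, z, process_var, meas_var)
    return mean, var
```

Each new reading pulls the estimate toward the measurement by an amount set by the gain, and the variance shrinks as evidence accumulates.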

Exploratory Hand: Leveraging Safe Contact to Facilitate Manipulation in Cluttered Spaces

We present a new gripper and exploration approach that uses an exploratory finger with very low reflected inertia for probing and grasping objects quickly and safely in unstructured environments. Equipped with sensing and force control, the gripper allows a robot to leverage contact information to accurately estimate object location through a particle filtering algorithm, and to grasp objects despite location uncertainty using a contact-first approach. This publication is still under review, so it is not yet available.
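
Since the publication is not yet available, the following is only a generic sketch of how a particle filter can turn contact readings into an object location estimate. It assumes a hypothetical 1-D workspace, a Gaussian contact measurement model, and illustrative noise values. None of these come from the paper.

```python
import math
import random


def particle_filter_estimate(contact_readings, n_particles=2000,
                             meas_sigma=0.02, jitter_sigma=0.005, seed=0):
    """Estimate a 1-D object position (hypothetical units: m) from contact readings."""
    rng = random.Random(seed)
    # Prior: the object may be anywhere in a 1 m wide workspace.
    particles = [rng.uniform(0.0, 1.0) for _ in range(n_particles)]
    for z in contact_readings:
        # Weight each hypothesis by the Gaussian likelihood of the reading.
        weights = [math.exp(-0.5 * ((p - z) / meas_sigma) ** 2)
                   for p in particles]
        # Resample in proportion to weight, then jitter to keep diversity.
        particles = rng.choices(particles, weights=weights, k=n_particles)
        particles = [p + rng.gauss(0.0, jitter_sigma) for p in particles]
    # Report the posterior mean as the location estimate.
    return sum(particles) / n_particles
```

Calling `particle_filter_estimate([0.61, 0.59, 0.62, 0.58, 0.60])` concentrates the particles near the readings. Each additional contact narrows the set of plausible object locations, which is what makes a contact-first grasp feasible under uncertainty.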

A Stretchable Tactile Sleeve for Reaching into Cluttered Spaces

We present a highly conformable, stretchable sensory skin made entirely of soft components. The skin uses pneumatic taxels and stretchable channels to conduct pressure signals to off-board MEMS pressure sensors. The skin is able to resolve forces down to 0.01 N and responds to vibrations up to 200 Hz. We apply the skin to a 2-degree-of-freedom robotic wrist with intersecting axes for manipulation in constrained spaces, and show that it has sufficient sensitivity and bandwidth to detect the onset of sliding as the robot contacts objects. We demonstrate the skin in object acquisition tasks in a tightly constrained environment in which extraneous contacts are unavoidable.

Previous Work

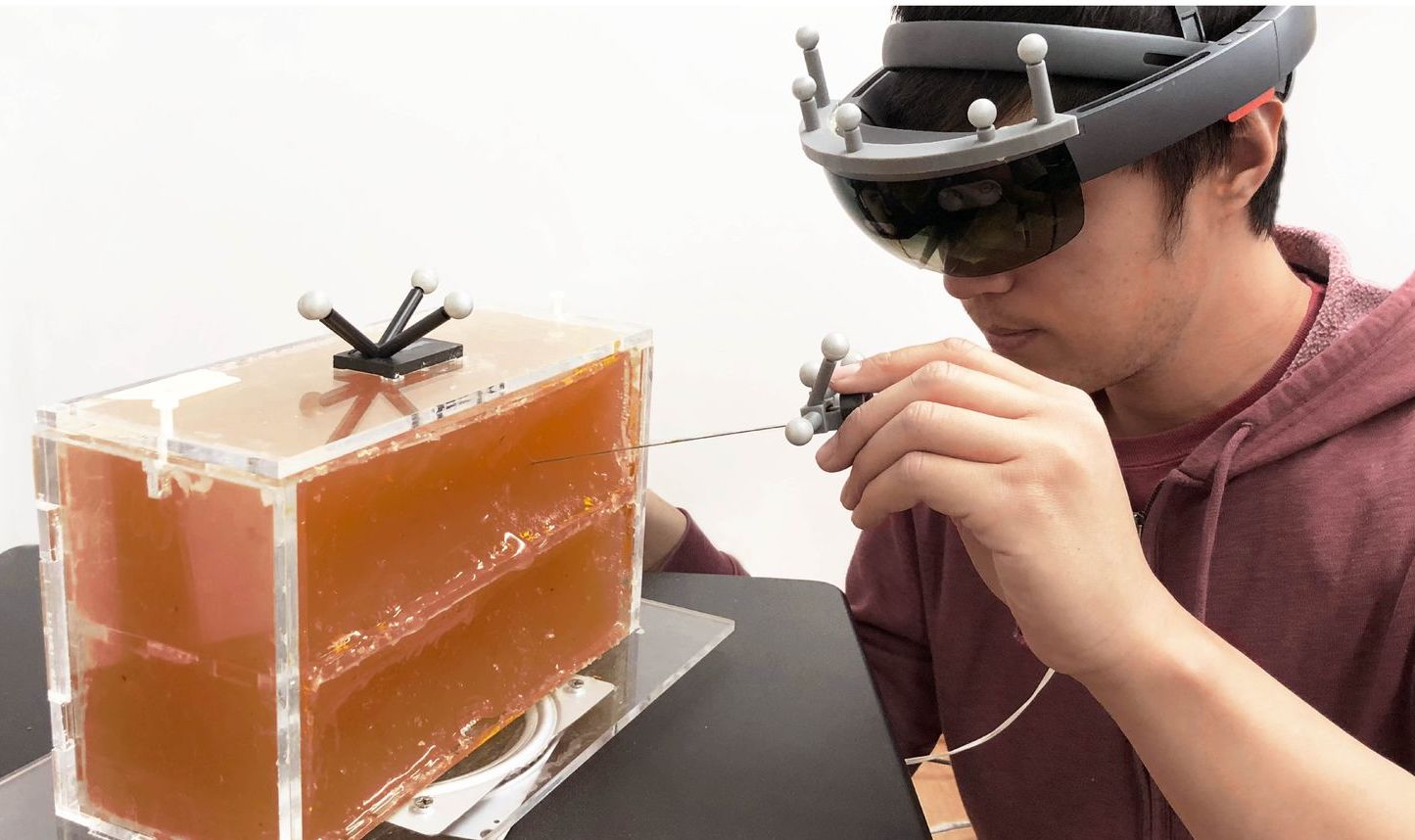

HoloNeedle: Augmented Reality Guidance System for Needle Placement Investigating the Advantages of Three-dimensional Needle Shape Reconstruction

An augmented reality guidance system for needle placement in tissue using needle shape reconstruction and sensing.

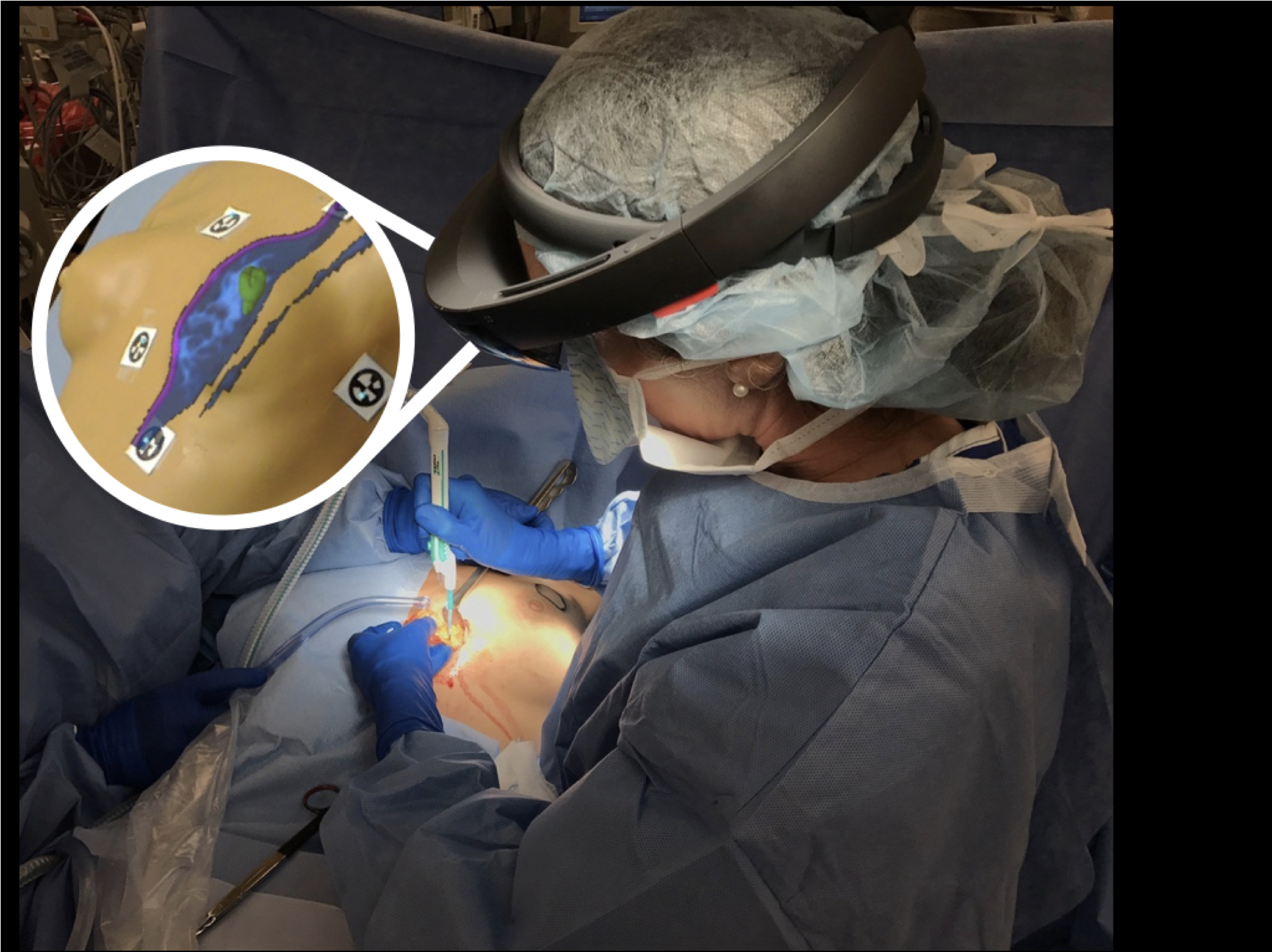

A Mixed-Reality System for Breast Surgical Planning

We have developed a mixed-reality system that projects a 3D “hologram” of images from a breast MRI onto a patient using the Microsoft HoloLens. The goal of this system is to reduce the number of repeated surgeries by improving surgeons’ ability to determine tumor extent. We are conducting a pilot study in patients with palpable tumors that tests a surgeon’s ability to accurately identify the tumor location via mixed-reality visualization during surgical planning.

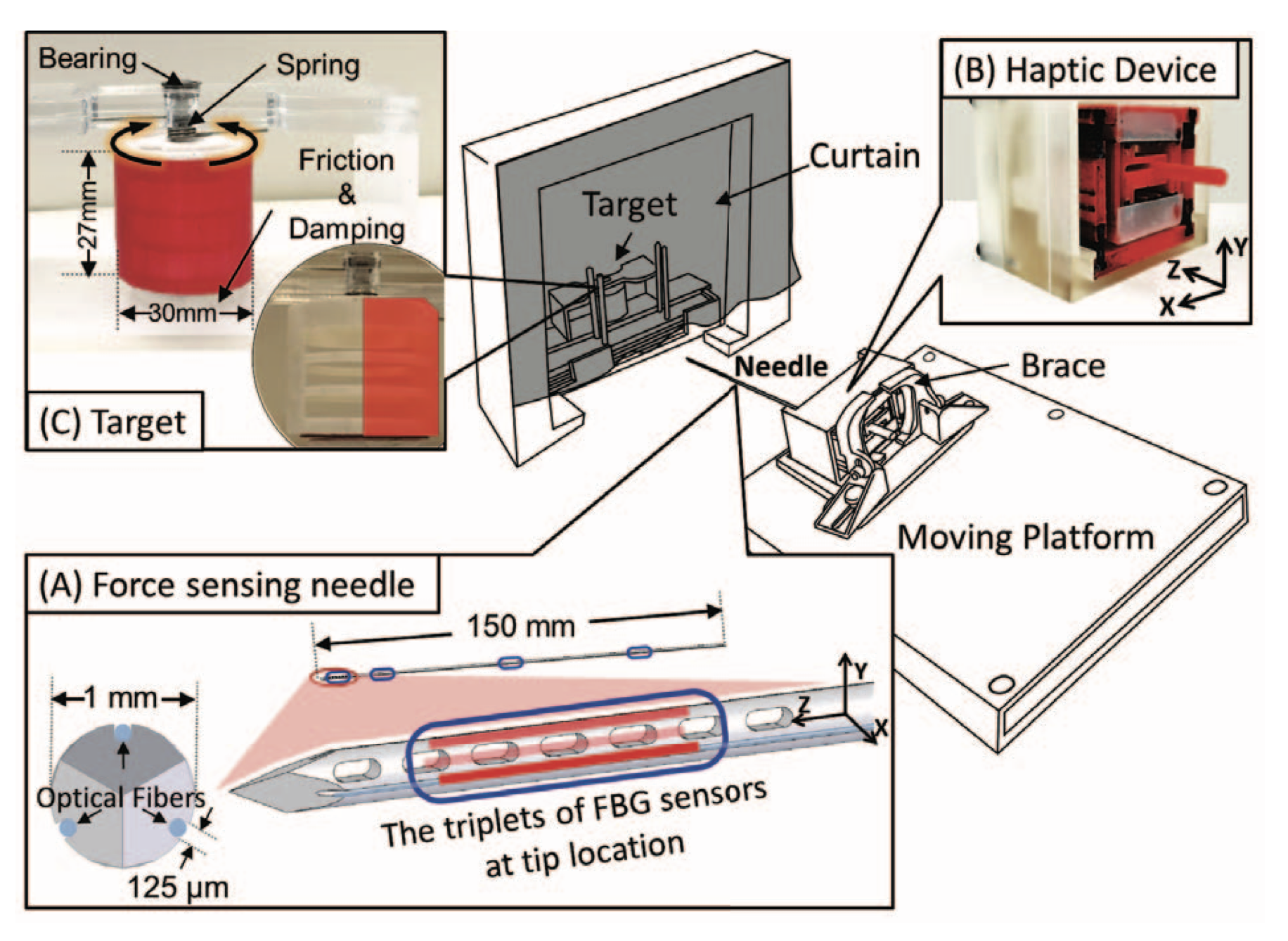

Display of Needle Tip Contact Forces for Steering Guidance

An MR-compatible biopsy needle stylet is instrumented with optical fibers that provide information about contact conditions between the needle tip and organs or hard tissues such as bone or tumors. This information is rendered via a haptic display that uses ultrasonic motors to convey directional cues to users. Lateral haptic cues at the fingertips improve the targeting accuracy and success rate in penetrating a prostate phantom.

The Effect of Manipulator Gripper Stiffness on Teleoperated Task Performance

The absence of environment force sensing in robot-assisted minimally invasive surgery makes it challenging for surgeons to perform tasks while applying a controlled force that does not damage patient tissue. One way to help modulate grip force is to use a passive spring to resist the closing of the master-side gripper of the teleoperated system. To investigate the effect of this spring stiffness, we developed a haptic device that can render programmed gripper stiffnesses. We conducted a study in which subjects used our device to teleoperate a Raven II surgical robotic system in a pick-and-place task. We found that increasing the gripper stiffness reduced the forces applied at the slave side.
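
Rendering a programmed gripper stiffness amounts to commanding a resisting force proportional to how far the gripper has closed. The sketch below assumes a linear spring, a hypothetical actuator force limit, and illustrative units. It is not the control code of the device described above.

```python
def gripper_resist_force(closure, stiffness, max_force=5.0):
    """Force (N) opposing gripper closure for a programmed spring stiffness.

    closure   -- how far the gripper has closed from fully open (m), hypothetical
    stiffness -- programmed spring constant (N/m)
    max_force -- actuator saturation limit (N), an assumed value
    """
    # A passive spring only resists closing; it never pushes an open gripper.
    displacement = max(closure, 0.0)
    # Hooke's law, clipped to what the actuator can render.
    return min(stiffness * displacement, max_force)
```

Changing `stiffness` between trials is how a study like this one could vary the felt resistance without swapping physical springs.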